As part of supporting a Chromium runtime for chromecast (as defined below), the CmaBackendProxy class and related code in the chromecast/media/cma/backend/proxy/ directory were added. This infrastructure proxies audio data across the CastRuntimeAudioChannel gRPC Service without impacting local playback of video or audio media, and doing so requires significant complexity. This document provides a high-level overview of the CmaBackendProxy implementation and the pipeline into which it calls. It is intended as a reference for understanding this pipeline, to simplify both code review and onboarding.

The chromecast pipeline, as it exists today in Chromium's chromecast/ directory, is rather complex, so only a high-level overview is given here. When playing out media, an implementation of the MediaPipelineBackend API is created, and this is wrapped by a platform-specific (e.g. Android, Fuchsia, Desktop, etc.) CmaBackend, as exists today in the Chromium repo. The CmaBackend creates AudioDecoder and VideoDecoder instances as needed, which are responsible for operations such as playback control, queueing up media playout, and decrypting DRM content (if needed), and which then pass these commands to the MediaPipelineBackend.

gRPC is a modern, open-source, high-performance RPC framework that can run in any environment. It can efficiently connect services in and across data centers, with pluggable support for load balancing, tracing, health checking, and authentication. gRPC was chosen as the RPC framework for the Chromium runtime to simplify the upgrade story, since both sides of the RPC channel can be upgraded independently.

The Chromium runtime is a runtime used by hardware devices to play out audio and video data received from a Cast session. It is expected to run on an embedded device.

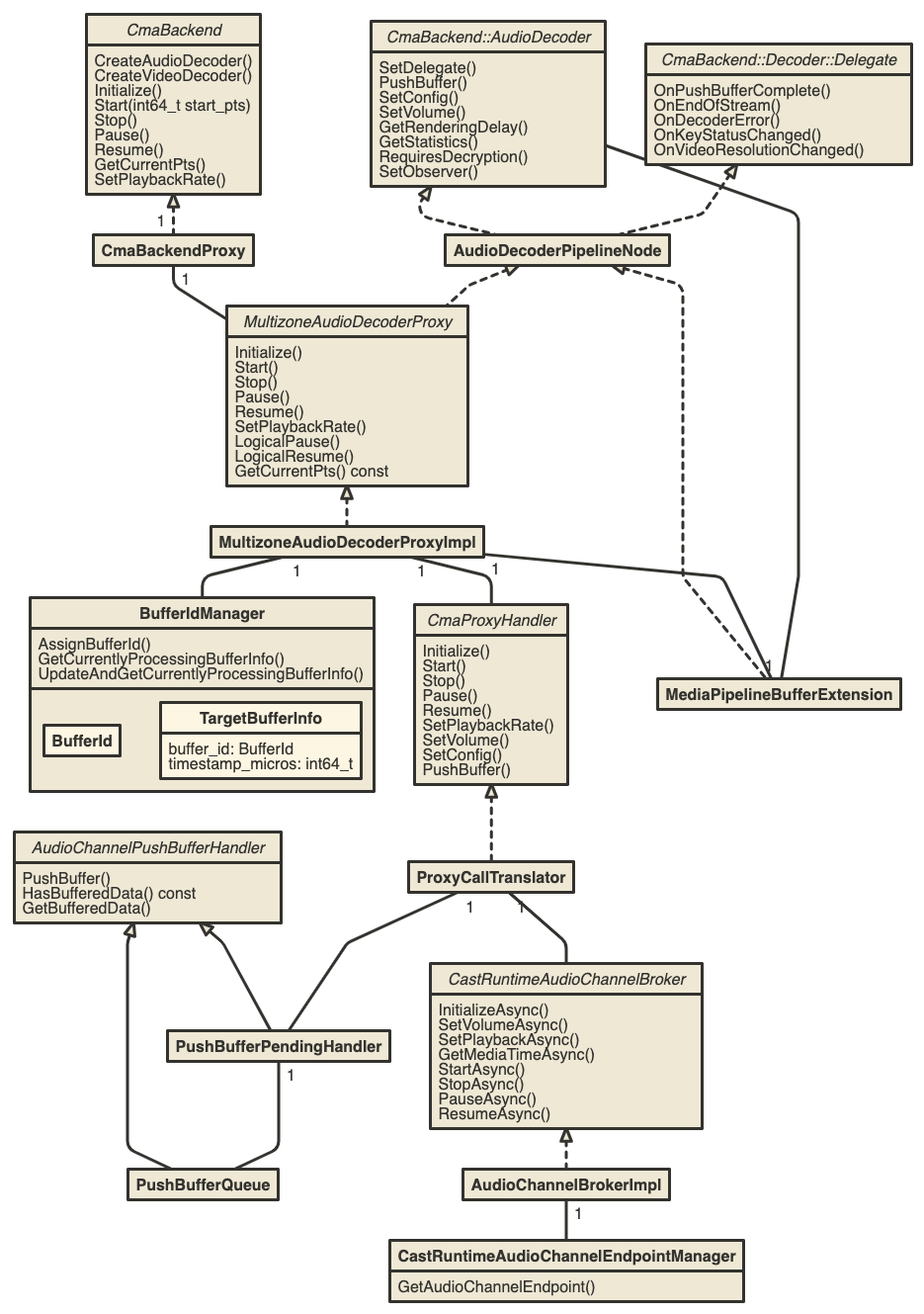

At a high level, the CmaBackendProxy (“proxy backend”) sits on top of another CmaBackend, which for the purposes of this document will be referred to as the delegated backend. The proxy backend sends all audio and video data to the delegated backend on which it sits, while also sending the same audio to the CastRuntimeAudioChannel gRPC channel used to proxy data, along with synchronization data to keep playback between the two in sync. The architecture used for this pipeline is shown below:

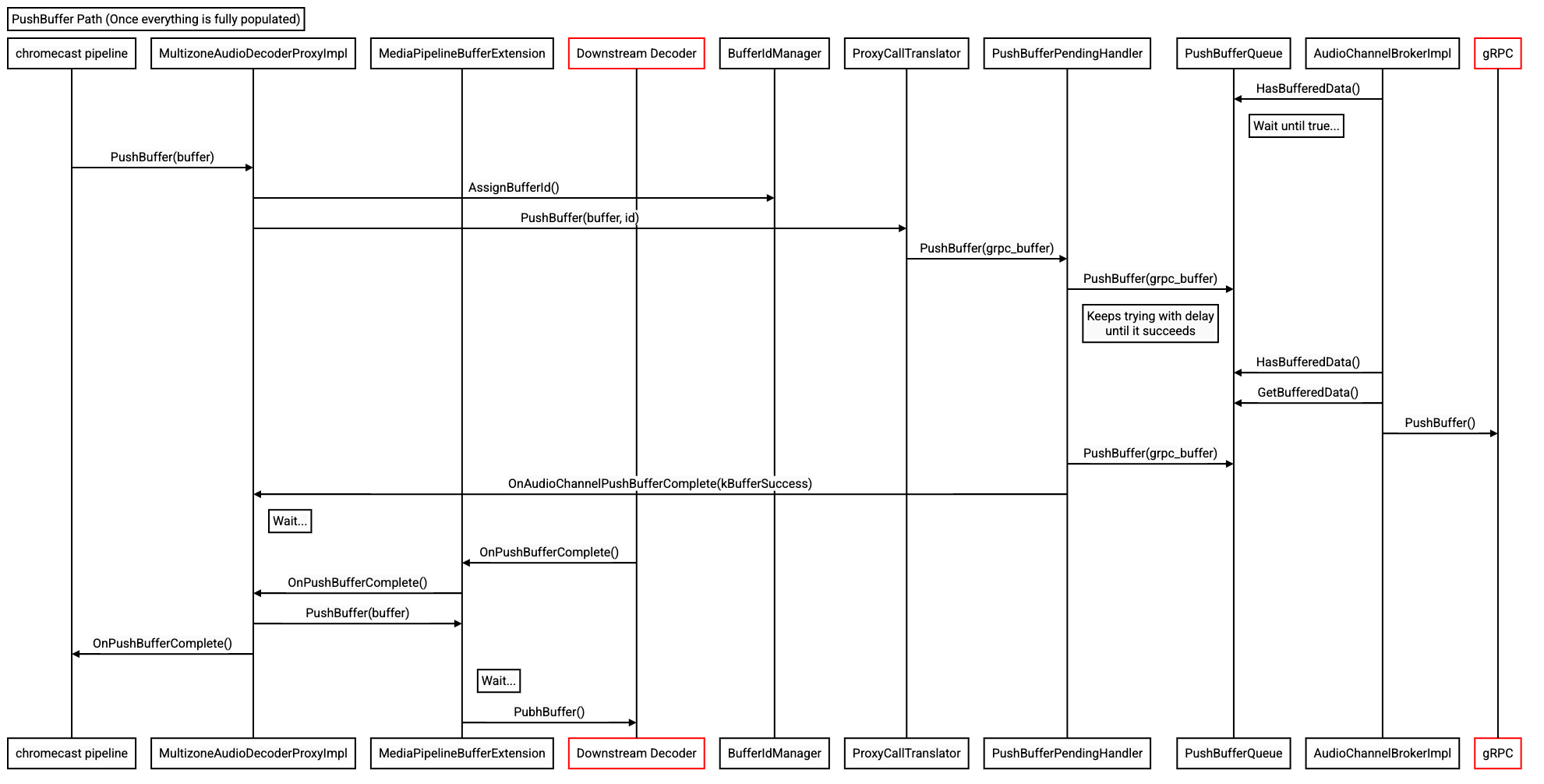
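As a rough illustration of the relationship described above, the following minimal sketch shows a proxy that forwards everything to a delegated backend while also copying audio to a remote sink. The `Backend`, `LocalBackend`, `ProxyBackend`, and `RemoteAudioSink` types and their methods are hypothetical simplifications invented for this example. The real CmaBackend interface creates decoders rather than accepting data directly, and the real remote side is a gRPC channel.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, heavily simplified stand-in for the CmaBackend interface.
class Backend {
 public:
  virtual ~Backend() = default;
  virtual void PushAudio(const std::string& data) = 0;
  virtual void PushVideo(const std::string& data) = 0;
};

// Hypothetical stand-in for the remote (gRPC) side: records the audio sent.
struct RemoteAudioSink {
  std::vector<std::string> sent;
  void Send(const std::string& data) { sent.push_back(data); }
};

// Plays the role of the delegated backend: records what it would play out.
class LocalBackend : public Backend {
 public:
  void PushAudio(const std::string& data) override { audio.push_back(data); }
  void PushVideo(const std::string& data) override { video.push_back(data); }
  std::vector<std::string> audio;
  std::vector<std::string> video;
};

// Plays the role of the proxy backend: video goes only to the delegate,
// while audio goes to both the delegate and the remote sink.
class ProxyBackend : public Backend {
 public:
  ProxyBackend(std::unique_ptr<Backend> delegate, RemoteAudioSink* remote)
      : delegate_(std::move(delegate)), remote_(remote) {
    assert(delegate_ && remote_);
  }

  void PushAudio(const std::string& data) override {
    remote_->Send(data);
    delegate_->PushAudio(data);
  }

  void PushVideo(const std::string& data) override {
    delegate_->PushVideo(data);
  }

 private:
  std::unique_ptr<Backend> delegate_;
  RemoteAudioSink* const remote_;  // Not owned.
};
```

Because `ProxyBackend` implements the same interface as the backend it wraps, callers in this sketch do not need to know whether proxying is active.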

The pipeline can be roughly divided into two parts:

- How the CmaBackendProxy gets the audio and video data it receives to the platform-specific MediaPipelineBackend, resulting in playout on the local device's speakers.
- How the same audio data is proxied across the CastRuntimeAudioChannel gRPC channel.

Both are summarized in the diagram above, with further details to follow.

The CmaBackendProxy, by virtue of being a CmaBackend, can create an AudioDecoder and a VideoDecoder. VideoDecoder creation and all video processing are delegated to the underlying delegated backend with no changes, so they will not be discussed further. The AudioDecoder is more interesting: audio data passes through three CmaBackend::AudioDecoder types in this architecture, two of them proxy-specific and the third being the delegated backend's decoder. In processing order, they are:

1. MultizoneAudioDecoderProxy: This class is the starting point for sending data over the CastRuntimeAudioChannel gRPC, using the pipeline described in the following section.
2. MediaPipelineBufferExtension: This class performs no processing itself, but instead just stores the data from PushBuffer calls locally, so that an extra few seconds of data may be queued up in addition to what the local decoder stores.
3. CmaBackend::AudioDecoder for this specific platform, as described above. This is owned by the delegated backend itself.

All audio data passes through these three layers so that it may be played out locally. For local playback as described here, this functionality is for the most part uninteresting: by design, the end user should notice no difference in audio playout. At an architecture level, the only noticeable difference for an embedder is that extra data is queued locally in the MediaPipelineBufferExtension.

As an abstraction on top of the above, the AudioDecoderPipelineNode was introduced as a parent for MultizoneAudioDecoderProxy and MediaPipelineBufferExtension, though its existence can for the most part be ignored. All functions in this base class act as pass-through methods, directly calling into the lower layer's method of the same name. This class therefore exists purely for software-engineering reasons, as a way to reduce the code complexity of the child classes.
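The pass-through pattern described above can be sketched roughly as follows. This is an illustration only: `AudioDecoder` here is a two-method stand-in for CmaBackend::AudioDecoder, and `PipelineNode`, `CountingNode`, and `RecordingDecoder` are hypothetical names that do not appear in the Chromium code.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Simplified stand-in for CmaBackend::AudioDecoder; the real interface has
// more methods and different signatures.
class AudioDecoder {
 public:
  virtual ~AudioDecoder() = default;
  virtual bool PushBuffer(const std::string& buffer) = 0;
  virtual void SetVolume(float multiplier) = 0;
};

// Hypothetical pass-through base: each method calls the method of the same
// name on the next decoder in the chain.
class PipelineNode : public AudioDecoder {
 public:
  explicit PipelineNode(AudioDecoder* next) : next_(next) { assert(next_); }

  bool PushBuffer(const std::string& buffer) override {
    return next_->PushBuffer(buffer);
  }
  void SetVolume(float multiplier) override { next_->SetVolume(multiplier); }

 private:
  AudioDecoder* const next_;  // Not owned.
};

// A child class overrides only the method it cares about and inherits the
// pass-through behavior for everything else.
class CountingNode : public PipelineNode {
 public:
  using PipelineNode::PipelineNode;

  bool PushBuffer(const std::string& buffer) override {
    ++pushed_;
    return PipelineNode::PushBuffer(buffer);
  }
  int pushed() const { return pushed_; }

 private:
  int pushed_ = 0;
};

// Terminal decoder that records what reaches the bottom of the chain.
class RecordingDecoder : public AudioDecoder {
 public:
  bool PushBuffer(const std::string& buffer) override {
    buffers.push_back(buffer);
    return true;
  }
  void SetVolume(float multiplier) override { volume = multiplier; }

  std::vector<std::string> buffers;
  float volume = 1.0f;
};
```

With a base class of this shape, a child such as `CountingNode` contains only the logic specific to it, which is the complexity reduction the paragraph above refers to.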

The MultizoneAudioDecoderProxy, as mentioned above, proxies data across the CastRuntimeAudioChannel gRPC channel. The pipeline used to do so consists of the following classes, in order:

1. MultizoneAudioDecoderProxyImpl: As mentioned above, all CmaBackend::AudioDecoder methods call into here first, as do methods corresponding to the public calls on CmaBackend. This class mostly acts as a pass-through, immediately calling into the layer below for all of these method calls. The BufferIdManager owned by this class adds a bit of complexity, in that it assigns buffer IDs to PushBuffer calls, to be used for audio timing synchronization between both sides of the CastRuntimeAudioChannel gRPC channel.
2. ProxyCallTranslator: As the name suggests, this class converts between the types understood by the media pipeline, as used by the layer above, and those supported by CastRuntimeAudioChannel gRPC. To this end, PushBufferQueue helps to turn the “Push” model used by the CmaBackend::AudioDecoder's PushBuffer() method into a “Pull” model that better aligns with the gRPC async model. The PendingPushBufferHelper works alongside it to support returning a kBufferPending response, as required by the PushBuffer() method's contract. In addition, ProxyCallTranslator is responsible for handling threading assumptions made by the classes above and below it, such as how data returned by the layer below must be processed on the correct thread in the layer above.
3. CastRuntimeAudioChannelBroker: Implementations of this abstract class take the CastRuntimeAudioChannel gRPC types supplied by the layer above, then use them to make calls against the gRPC Service.

In order to support varied user scenarios, a number of build flags have been introduced to customize the functionality of this pipeline:

This flag enables or disables support for sending data across the CastRuntimeAudioChannel. When enabled, the CmaBackendFactoryImpl class will wrap the implementation-specific CmaBackend with a CmaBackendProxy instance, as described above. This flag defaults to False.
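Returning to the ProxyCallTranslator layer described earlier, the conversion from a "Push" model to a "Pull" model, together with buffer ID assignment and a pending response, can be sketched as below. This is a single-threaded illustration under stated assumptions, not the Chromium implementation: `PushPullQueue`, `PushStatus`, and `QueuedBuffer` are hypothetical names, and the sketch assumes that a pending result means the buffer has been retained and that the producer is notified through a callback once it may push again.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <functional>
#include <string>
#include <utility>

// Hypothetical, simplified result type for a push.
enum class PushStatus { kSuccess, kPending };

struct QueuedBuffer {
  std::int64_t id;   // Assigned on push; stands in for a buffer ID.
  std::string data;
};

class PushPullQueue {
 public:
  PushPullQueue(std::size_t capacity, std::function<void()> on_push_complete)
      : capacity_(capacity), on_push_complete_(std::move(on_push_complete)) {
    assert(capacity_ > 0);
  }

  // Producer side ("push"). If the queue is full, the buffer is held in a
  // single pending slot and kPending is returned; the producer is expected
  // not to push again until the completion callback has run.
  PushStatus Push(std::string data) {
    assert(!has_pending_);
    QueuedBuffer buffer = {next_id_++, std::move(data)};
    if (queue_.size() < capacity_) {
      queue_.push_back(std::move(buffer));
      return PushStatus::kSuccess;
    }
    pending_ = std::move(buffer);
    has_pending_ = true;
    return PushStatus::kPending;
  }

  // Consumer side ("pull"). Returns false if nothing is queued. Pulling frees
  // a slot, so any pending buffer is moved into the queue and the producer is
  // told that its earlier push has completed.
  bool Pull(QueuedBuffer* out) {
    if (queue_.empty()) return false;
    *out = std::move(queue_.front());
    queue_.pop_front();
    if (has_pending_) {
      queue_.push_back(std::move(pending_));
      has_pending_ = false;
      on_push_complete_();
    }
    return true;
  }

 private:
  const std::size_t capacity_;
  std::function<void()> on_push_complete_;
  std::deque<QueuedBuffer> queue_;
  QueuedBuffer pending_ = {0, ""};
  bool has_pending_ = false;
  std::int64_t next_id_ = 0;
};
```

In this sketch the consumer decides when data leaves the queue, which is the property that makes a pull model a natural fit for an asynchronous sender. Thread safety, which the text above attributes to ProxyCallTranslator, is deliberately left out.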